The AI Oracle Fallacy

Why Smart Companies Are Making Dumb Decisions

Innovation teams across industries are falling into the same trap. They're implementing AI-driven strategies, launching AI-recommended products, and pursuing AI-identified opportunities with impressive speed and sophistication. Yet many of these initiatives fail, not because the AI was wrong, but because no one knew how to determine when it was right.

The Hidden Problem

The issue isn't AI capability; modern systems produce remarkably insightful analyses and recommendations. The problem is validation. When AI suggests a new market opportunity, recommends a technology stack, or identifies customer behavior patterns, how do you know whether to trust it?

We regularly observe organizations treating AI outputs like oracle pronouncements: authoritative, complete, and beyond questioning. Individual team members fall into this trap, and their organizations rarely notice the pattern. The result is a dangerous dependency in which critical business decisions rest on black-box processes that no one fully understands.

The pattern is predictable: AI provides compelling analysis, teams build strategies around it, projects launch with confidence, and then reality delivers unwelcome surprises. The AI wasn't necessarily wrong; the teams simply had no way to tell when and where it was right.

A Lesson from Physics Class

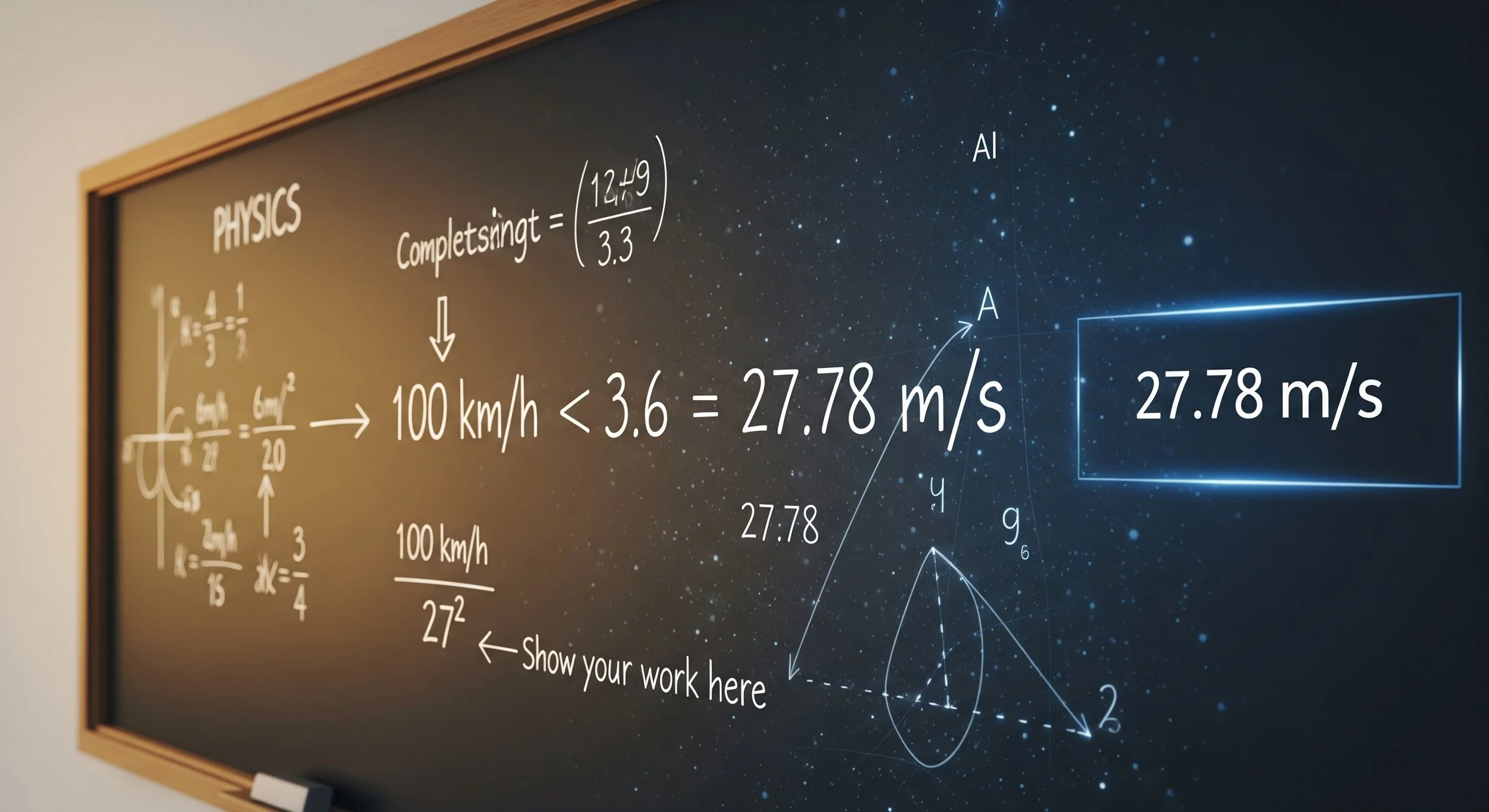

The solution came to me from an unlikely source: my high school physics teacher, Mr. Pulles. He had a maddening habit of rejecting correct answers. "100 km/h is not equal to 27.78 m/s," he'd tell me. "I need to see how you got there."

I was essentially his black-box problem, producing right answers without revealing the process. Eventually, I understood his point: the process matters more than the result, because the process determines when and where the result is valid.
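For the conversion in Mr. Pulles's example, the work he wanted to see is short: multiply by the number of meters in a kilometer and divide by the number of seconds in an hour. Written out as a worked equation, it looks like this:

```latex
100\,\frac{\text{km}}{\text{h}}
  = 100\,\frac{\text{km}}{\text{h}}
    \times \frac{1000\,\text{m}}{1\,\text{km}}
    \times \frac{1\,\text{h}}{3600\,\text{s}}
  = \frac{100\,000}{3600}\,\frac{\text{m}}{\text{s}}
  \approx 27.78\,\frac{\text{m}}{\text{s}}
```

The last step also shows that 27.78 is a rounded value, a detail the bare answer hides.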

Years later, working in business intelligence, I realized Mr. Pulles had been teaching phenomenal thinking: the ability to treat outputs as phenomena to be understood and validated, not simply consumed.

The Phenomenal Thinking Solution

Innovation teams need systematic approaches to AI validation. This doesn't mean becoming AI experts or abandoning AI tools. It means developing what I call "phenomenal thinking": treating AI as a powerful phenomenon to be understood rather than an oracle to be obeyed.

The framework is straightforward:

Replicate the reasoning: Can you arrive at similar conclusions through different methods? If AI identifies a market opportunity, validate it through traditional research. If it recommends a technical approach, ensure it aligns with engineering principles. Multiple paths to the same insight build confidence.

Apply the insight: Can you use this output to predict or solve related problems? Valuable AI recommendations should have explanatory power beyond the immediate question. Test whether the AI's logic holds across similar situations.

Limit the application: Under what circumstances does this approach break down? Every AI system has boundaries. Understanding these limits prevents costly misapplications and helps teams know when to seek alternative approaches.

Implementation for Innovation Teams

Start with validation protocols: Build AI validation into your innovation processes. Before acting on AI recommendations, require teams to validate through alternative methods and test the boundaries of applicability.

Develop cross-functional perspectives: Ensure teams can approach AI outputs from technical, market, operational, and strategic angles. Different viewpoints reveal different limitations and opportunities.

Cultivate productive skepticism: Create a culture where questioning AI outputs is seen as due diligence, not obstruction. The goal isn't to undermine AI but to understand when and how to trust it.

Document the reasoning: Just as Mr. Pulles required students to show their work, require teams to document how they validated AI outputs. This creates institutional knowledge about when specific AI approaches work well and when they don't.

The Strategic Advantage

Organizations that master AI validation will make better innovation decisions, reduce costly false positives, and build more robust strategies. They'll move faster than companies that avoid AI, but more safely than companies that trust it blindly.

The competitive advantage goes to teams that can harness AI's analytical power while maintaining human judgment about its limitations. They understand that the most dangerous phrase in innovation isn't "We don't know." It's "The AI said so."

Mr. Pulles was preparing students for a world where showing your work matters more than getting the right answer. For innovation teams, that world has arrived.
